New deep learning method boosts MRI results without requiring clean training data

Ulugbek Kamilov, Hongyu An lead team combining deep learning, radiology expertise

When patients undergo an MRI, they are told to lie still because even the slightest movement compromises image quality and can create blurred spots and speckles known as artifacts. Moreover, a long acquisition time is usually required to produce high-quality MRI images. A team of researchers from Washington University in St. Louis has developed a new deep-learning method that can minimize the artifacts and other noise that movement and a short image-acquisition time introduce into MRI images.

Hongyu An, a professor of radiology at the School of Medicine’s Mallinckrodt Institute of Radiology (MIR), and Ulugbek Kamilov, assistant professor of computer science & engineering and of electrical & systems engineering in the McKelvey School of Engineering, led an interdisciplinary team that developed the Phase2Phase deep learning method. They trained it using only images with artifacts and without a ground truth, which in this case would be a perfect image without artifacts. Results of the work were published May 19, 2021, in Investigative Radiology.

A deep learning model learns directly from the training data how to distinguish the signal from artifacts and from noise (variations in signal intensity in an image). Many existing deep learning-based MRI reconstruction methods can remove artifacts and noise, but they learn from a ground truth reference, which can be difficult to obtain.

“In an MRI, it may be easy or hard to scan someone, depending on their physical health, but everyone still has to breathe,” Kamilov said. “When they breathe, their internal organs move, and we have to determine how to correct for those movements.”

In Phase2Phase, the team feeds the deep learning model only sets of bad images and trains the neural network to predict a good image from a bad one without a ground truth reference.

Weijie Gan, a doctoral student in Kamilov’s lab and a co-first author on the paper, wrote the software for Phase2Phase to remove noise and artifacts. Cihat Eldeniz, an instructor of radiology at MIR and co-first author, worked on the MRI acquisition and motion detection used in the study. They modeled Phase2Phase after an existing machine learning method known as Noise2Noise, which restores images without clean data.
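The idea behind Noise2Noise-style training can be illustrated with a small numerical sketch. This is not the team's Phase2Phase software: it assumes synthetic 1D signals, zero-mean Gaussian noise, and a simple linear least-squares denoiser in place of a neural network, and every name in it (`make_pairs`, `fit_linear_denoiser`, `basis`) is hypothetical. It fits one denoiser using a second noisy copy as the target and another using the clean signal as the target, then compares both on held-out data. Because the noise in the target is zero-mean and independent of the input, the squared-error fit should land close to the one obtained with clean targets.

```python
import numpy as np


def make_pairs(basis, n_samples, noise_std, rng):
    """Return clean signals plus two independently corrupted copies."""
    # Clean signals lie in the low-dimensional span of `basis` (an assumption).
    clean = rng.standard_normal((n_samples, basis.shape[0])) @ basis
    noisy_a = clean + noise_std * rng.standard_normal(clean.shape)
    noisy_b = clean + noise_std * rng.standard_normal(clean.shape)
    return clean, noisy_a, noisy_b


def fit_linear_denoiser(inputs, targets):
    """Least-squares weights W such that inputs @ W approximates targets."""
    weights, *_ = np.linalg.lstsq(inputs, targets, rcond=None)
    return weights


def mse(a, b):
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))


rng = np.random.default_rng(0)
basis = rng.standard_normal((3, 16))  # hypothetical 3-component signal model

clean, noisy_a, noisy_b = make_pairs(basis, 5000, 0.5, rng)

# Noise2Noise-style fit: the target is a second noisy copy, never the clean signal.
w_n2n = fit_linear_denoiser(noisy_a, noisy_b)
# Supervised fit with clean targets, computed here only as a reference point.
w_sup = fit_linear_denoiser(noisy_a, clean)

# Evaluate on fresh data; the clean signal is used for scoring only.
test_clean, test_noisy, _ = make_pairs(basis, 1000, 0.5, rng)
err_input = mse(test_noisy, test_clean)
err_n2n = mse(test_noisy @ w_n2n, test_clean)
err_sup = mse(test_noisy @ w_sup, test_clean)

print(f"noisy input error:       {err_input:.4f}")
print(f"noisy-target denoiser:   {err_n2n:.4f}")
print(f"clean-target denoiser:   {err_sup:.4f}")
```

In this toy setting the denoiser trained on noisy targets should reduce the error well below that of the raw noisy input and come close to the clean-target reference. Real MRI artifacts are more structured than independent Gaussian noise, so the sketch illustrates only the training principle, not the performance reported in the study.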

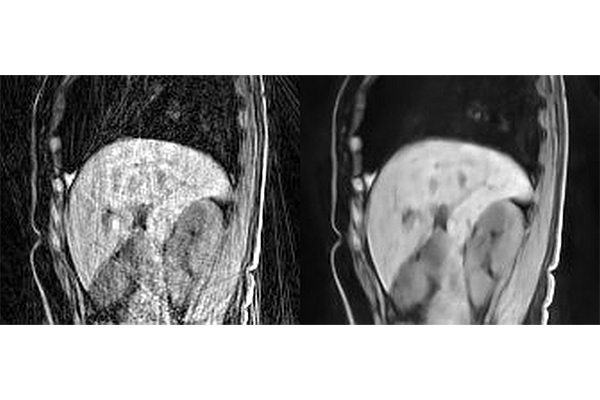

In a retrospective study, the team evaluated MRI data from 33 participants (15 healthy persons and 18 patients with liver cancer), all of whom were allowed to breathe normally while in the scanner. The Phase2Phase reconstructions were compared with images reconstructed by three other methods: UNet3DPhase, another deep learning method, which is trained on a high-quality ground truth; compressed sensing; and multicoil nonuniform fast Fourier transform (MCNUFFT).

In addition, the Phase2Phase method has successfully reconstructed 66 MRI data sets acquired at another institution using different acquisition parameters, demonstrating its broad applicability.

Two radiologists, who were blinded to which reconstruction method was used on the images, reviewed the images for sharpness, contrast and artifacts. They found that the Phase2Phase and UNet3DPhase images had higher contrast and fewer artifacts than the compressed sensing images. One reviewer, but not the other, rated the UNet3DPhase and Phase2Phase images as sharper than the compressed sensing images. The Phase2Phase and UNet3DPhase images were similar in sharpness and contrast, while the UNet3DPhase images had fewer artifacts. The Phase2Phase images preserved the motion vector fields, while the compressed sensing images artificially reduced them.

“The Phase2Phase deep learning method provides an excellent solution for a rapid reconstruction of high quality 4D liver images using only a fraction of acquisition time,” said An, who also is a professor of neurology. “It improves image quality for better clinical diagnosis.”

The research team and Siemens Healthineers collaborated to develop work-in-progress (WIP) software. This WIP software was disclosed to the Washington University in St. Louis Office of Technology Management, and a patent application was jointly filed.

Eldeniz C, Gan W, Chen S, Fraum T, Ludwig D, Yan Y, Liu J, Vahle T, Krishnamurthy U, Kamilov U, An H. Phase2Phase: Respiratory Motion-Resolved Reconstruction of Free-Breathing Magnetic Resonance Imaging Using Deep Learning Without a Ground Truth for Improved Liver Imaging. Investigative Radiology, May 29, 2021, doi: 10.1097/RLI.0000000000000792.

This study was funded by Siemens Healthineers and the Washington University School of Medicine Mallinckrodt Institute of Radiology.