WashU Expert: Patients want AI, doctors to work together

Abhinav Jha, philosophers outline framework for integration of machine learning into medical diagnostics

Artificial intelligence (AI) shows tremendous promise in health care.

In PET images of patients with cancer, AI has the potential to outline the boundaries of tumors. It can already piece together data captured by MRI devices to create a picture of the brain, identify antimicrobial resistance, and help in creating new drugs and testing them for adverse interactions.

How would people feel, however, about being diagnosed by an AI system? An interdisciplinary team from Washington University in St. Louis worked with peers from other institutions to probe this question. Their findings were published as a comment paper in the journal Nature Medicine earlier this year.

Would you want to receive a computer-generated email informing you of the precise probability it calculated that you have cancer?

“‘I’m 75.8 percent uncertain.’ That is not something I would think, myself,” said Abhinav Jha, assistant professor of biomedical engineering at the university’s McKelvey School of Engineering. But that is how AI methods with significant promise — known as probabilistic classifiers — report results.

Jha began thinking more deeply about the implications of probabilistic classifiers in medicine after a call from Anya Plutynski, an associate professor of philosophy in Arts & Sciences. As a historian and philosopher of biology and medicine, she was no stranger to the complexities of medical screening.

“I’d thought about and published on the trade-offs involved in cancer screening before, but there are additional complications associated with AI, which I thought collaborating with Abhinav would help illuminate,” she said. She reached across a considerable academic divide to connect with Jha, who builds AI systems.

Together with Jonathan Birch, from the Centre for Philosophy of Natural and Social Science at the London School of Economics and Political Science, and Kathleen Creel, at the Institute for Human-Centered Artificial Intelligence at Stanford University, the four asked: What do patients want when it comes to AI in medical screening? And what is the best way to accommodate a patient’s values and individual relationship to risk?

With the assistance of Washington University’s patient outreach group, they conducted a small survey, asking patients if they would prefer AI, alone, to make diagnoses, or if they wanted nothing to do with it. Or perhaps they would prefer something in between — a “decision threshold” based on patient preferences and how much risk they are willing to take.

“I was a bit surprised that patients would want AI to be incorporated at all,” Plutynski said. But most of them did, to a degree.

Their paper noted many comments along this line: I see AI as a tool to assist clinicians in medical decisions. I do not see it as being able to make decisions that effectively weigh my personal input or as having the clinical experience and intuition of a good physician.

“Patients are not comfortable with AI being the sole decision maker,” Jha said. “They want humans to be the supervisors, but they do want the AI’s probability calculations to be incorporated into a final decision.”

Most respondents also expressed concern that they would not know the level of uncertainty that AI calculated. They were worried, too, that their values might not be taken into consideration.

The survey helped the team home in on a promising “decision pathway,” a method by which, after patients get screened, they receive their results and move forward.

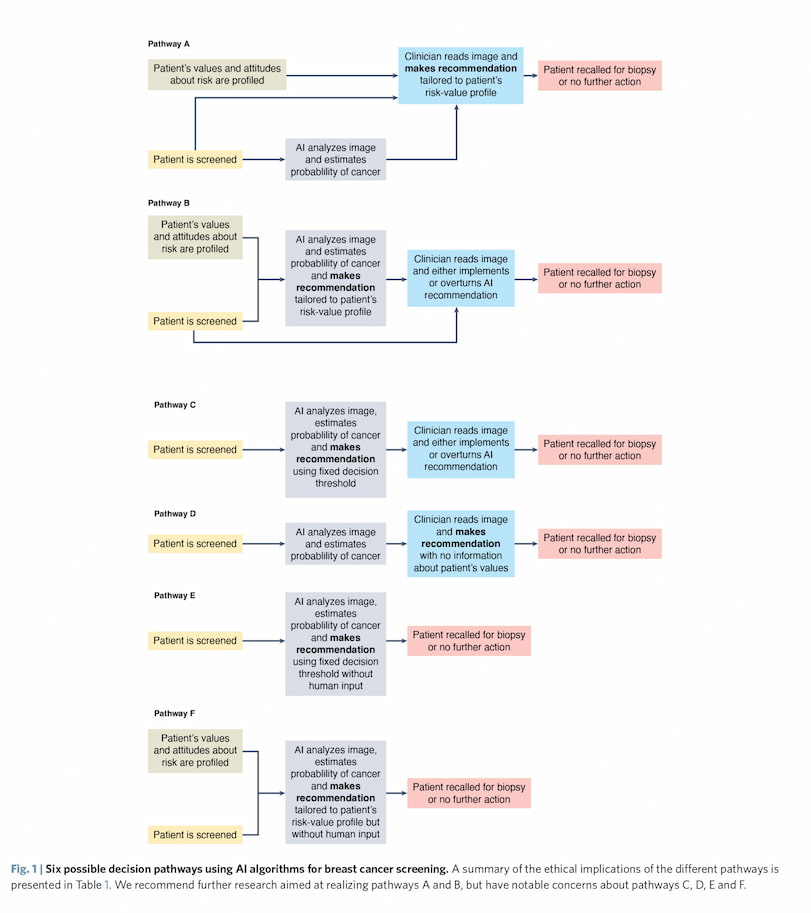

They landed on two scenarios they recommend considering further. In both scenarios, the patient is screened (via mammography, for example) and also takes a risk-value questionnaire. From there, the AI integrates the patient’s screening results and the patient’s risk-value profile, then uses both to make a recommendation — recall the patient for a biopsy or not (this is Pathway ‘B’ in the above image).

The doctor can accept or reject the AI’s recommendation.

Alternatively, the patient’s risk-value results are sent to a doctor, and the AI calculates a probability of cancer based only on the patient’s screening results. The doctor receives the AI’s results and integrates them with the patient’s profile to make a decision (Pathway ‘A’).
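To make the difference between the two pathways concrete, here is a minimal sketch in Python. It is an illustration only, not the method from the paper: the `RiskValueProfile` class, its two cost fields, and the expected-cost rule used to turn a questionnaire into a decision threshold are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RiskValueProfile:
    """Hypothetical summary of a patient's risk-value questionnaire."""
    missed_cancer_cost: float       # how bad the patient rates a missed cancer
    unnecessary_biopsy_cost: float  # how bad the patient rates a needless biopsy

    def recall_threshold(self) -> float:
        # Assumed expected-cost rule: recall is preferred when
        # p * missed_cancer_cost >= (1 - p) * unnecessary_biopsy_cost,
        # which rearranges to p >= biopsy_cost / (biopsy_cost + missed_cost).
        total = self.missed_cancer_cost + self.unnecessary_biopsy_cost
        return self.unnecessary_biopsy_cost / total


def pathway_a_report(p_cancer: float) -> str:
    # Pathway A: the AI reports only a probability from the screening results;
    # the doctor weighs it against the patient's profile.
    return f"Estimated probability of cancer: {p_cancer:.1%}"


def pathway_b_recommendation(p_cancer: float, profile: RiskValueProfile) -> str:
    # Pathway B: the AI combines the probability with the patient's profile
    # and recommends whether to recall the patient for a biopsy.
    return "recall" if p_cancer >= profile.recall_threshold() else "no recall"


def final_decision(ai_recommendation: str,
                   doctor_override: Optional[str] = None) -> str:
    # In both pathways a human has the last word.
    return doctor_override if doctor_override is not None else ai_recommendation
```

Under these assumptions, the same screening result can lead to different recommendations for different patients: someone who rates a missed cancer as nine times worse than an unnecessary biopsy gets a recall threshold of 10 percent, while someone who weighs the two equally gets a threshold of 50 percent.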

“I think of AI as a source of both risks and opportunities in medicine. If diagnostic judgments are simply outsourced to AI, with no role for human readers at all, it risks eroding patient trust, but AI also has the potential to make diagnosis more patient-centered,” Birch said.

“Even a very skilful human reader can’t fine-tune their diagnostic criteria in ways that reflect this particular patient’s values and preferences — but well-designed AI can,” he said. “Our discussion of six possible decision pathways is an attempt to find that middle ground where patient trust is preserved but the opportunities of AI are seized.”

Challenges remain. Some are known, such as bias built into AI algorithms, and some have yet to arise. Collaborations such as this one can play an important role in ensuring that the expanded use of AI in medical settings happens in a way that makes both patients and clinicians most comfortable.

“I would love to have more collaboration,” Plutynski said. “This is going to have such a huge impact on medical care, and there are all sorts of interesting, hard ethical questions that arise in the context of screening and testing.”

Jha found the work to be validating. “As a scientist in AI, it gives me confidence that patients do want technology to support them. And they want the full array of what that technology can offer.

“They don’t just want to hear, ‘you have cancer,’ or ‘you don’t have cancer.’ If the technology can say, ‘you have a 75.8 percent chance of having cancer,’ then that’s what they want to know.”

This research was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health (NIH), grants R01-EB031051 and R56-EB028287.