Meta-learning to find every needle in every haystack

Visual active search tool developed by Anindya Sarkar, Yevgeniy Vorobeychik and Nathan Jacobs combines deep reinforcement learning with traditional active search methods

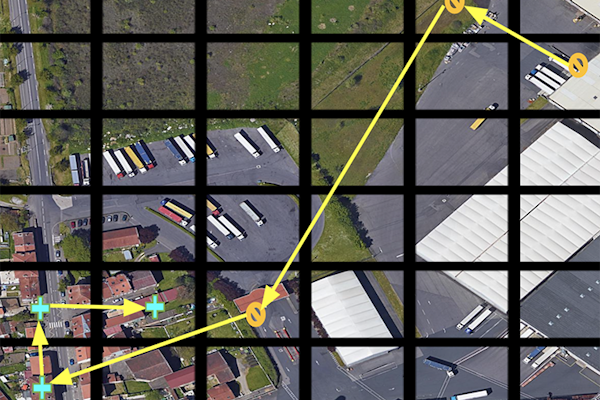

In geospatial exploration, the quest for efficient identification of regions of interest has recently taken a leap forward with visual active search (VAS). This modeling framework uses visual cues to guide exploration, with potential applications ranging from wildlife poaching detection to search-and-rescue missions to the identification of illegal trafficking activities.

A new approach to VAS developed in the McKelvey School of Engineering at Washington University in St. Louis combines deep reinforcement learning, where a computer can learn to make better decisions through trial and error, with traditional active search, where human searchers go out and verify what’s in a selected region. The team that developed the novel VAS framework includes Yevgeniy Vorobeychik and Nathan Jacobs, professors of computer science & engineering, and Anindya Sarkar, a doctoral student in Vorobeychik’s lab. The team presented its work Dec. 13 at the Neural Information Processing Systems conference in New Orleans.

“VAS improves on traditional active search more or less depending on search task,” Jacobs said. “If a task is relatively easy, then improvements are modest. But if an object is very rare – for example, an endangered species that we want to locate for purposes of wildlife conservation – then improvements offered by VAS are substantial. Notably, this isn’t about finding things faster. It’s about finding as many things as possible given limited resources, especially limited human resources.”

The team’s VAS framework improves on previous methods by breaking down the search into two distinct modules. First, a prediction module uses geospatial image data and the search history to predict which regions are of interest. Then a search module takes the resulting prediction map as input and outputs a search plan. Each module can be updated in real time as human explorers return results from physical searches.

“Instead of an end-to-end search policy, decomposing into two modules allows us to be much more adaptable,” Sarkar said. “During the actual search, we can update our prediction module with the search results. Then the search module can learn the dynamics of the prediction module – how it’s changing across search steps – and adapt. In this meta-learning strategy, the search module is basically learning how to search. It’s also human interpretable, so if the model isn’t working properly, the user can check it and debug as needed.”
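The loop described above (predict, search, observe, update) can be illustrated with a small toy sketch. This is not the authors' implementation. It assumes a hypothetical 1-D strip of grid cells, a hand-written neighbor update standing in for the learned prediction module, and a greedy rule standing in for the search policy that the real framework learns with reinforcement learning. All function names and parameters here are invented for illustration.

```python
from __future__ import annotations


def update_prediction(probs: list[float], cell: int, found: bool,
                      queried: set[int], step: float = 0.2) -> None:
    """Toy prediction update: record the verified outcome for the queried
    cell and nudge unqueried neighbors toward it (assumed spatial correlation)."""
    outcome = 1.0 if found else 0.0
    probs[cell] = outcome
    for nb in (cell - 1, cell + 1):
        if 0 <= nb < len(probs) and nb not in queried:
            probs[nb] += step * (outcome - probs[nb])


def plan_next(probs: list[float], queried: set[int]) -> int | None:
    """Toy search module: greedily pick the unqueried cell with the highest
    predicted probability (a stand-in for a learned search policy)."""
    candidates = [i for i in range(len(probs)) if i not in queried]
    if not candidates:
        return None
    return max(candidates, key=lambda i: probs[i])


def run_search(initial_probs: list[float], ground_truth: list[bool],
               budget: int) -> tuple[list[int], list[float]]:
    """Alternate between planning a query and updating the prediction map
    until the query budget (number of physical searches) is spent."""
    probs = list(initial_probs)
    queried: set[int] = set()
    found: list[int] = []
    for _ in range(budget):
        cell = plan_next(probs, queried)
        if cell is None:
            break
        queried.add(cell)
        hit = ground_truth[cell]  # the "human searcher" verifies the cell
        if hit:
            found.append(cell)
        update_prediction(probs, cell, hit, queried)
    return found, probs


# Hypothetical example: two adjacent targets, only two searches allowed.
initial = [0.1, 0.5, 0.4, 0.1, 0.45, 0.1]
truth = [False, True, True, False, False, False]
found, _ = run_search(initial, truth, budget=2)
print(found)  # [1, 2]
```

In this toy run, the first confirmed target raises the estimate for the neighboring cell above a distractor that initially scored higher, so the second and final search also finds a target. A plan fixed in advance from the initial map would have spent that search on the distractor.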

The framework draws its major strength from continually coupling two levels of deployment: the computational model predicts where to search, then humans go out into the world to conduct the search. Because the human component is vastly more expensive in time and other resources when exploring large geospatial areas, it makes sense to adapt and optimize the computer-generated search plan so that the search is as efficient as possible.

Adaptability in the computer model is especially important when the object sought differs drastically from the objects the model was trained on. In experiments, the VAS framework proposed by Sarkar, Vorobeychik and Jacobs showed marked improvement over existing methods across a variety of visual active search tasks.

Sarkar A, Jacobs N, and Vorobeychik Y. A partially supervised reinforcement learning framework for visual active search. Neural Information Processing Systems (NeurIPS), Dec. 10-16, 2023. https://doi.org/10.48550/arXiv.2310.09689

This research was supported by the National Science Foundation (IIS-1905558, IIS-1903207, and IIS-2214141), Army Research Office (W911NF-18-1-0208), Amazon, NVIDIA, and the Taylor Geospatial Institute managed by Saint Louis University.